From Multi-User Dungeons to Multi-Agent Worlds

How the first virtual societies reveal the cybersecurity challenges of emerging AI civilizations.

I recently listened to a talk by Jason Clinton, Chief Information Security Officer at Anthropic. Amid discussions of AI safety, trust, and infrastructure, one unexpected reference stood out: the Multi-User Dungeon, or MUD.

If you have never encountered one, a MUD was one of the first shared virtual worlds: entirely text-based and driven by typed commands. Players connected from all over the world and interacted through simple actions like:

go north

attack goblin

say hello to the innkeeper

Every command changed a collective narrative in real time. It was collaborative storytelling disguised as a game, an early, text-only version of the metaverse.

Inside these worlds lived non-player characters, or NPCs: simple rule-based programs that responded to player actions. Each NPC followed scripted instructions: defend a village, sell an item, give a quest. Their behavior was limited, but together they made the world feel alive. In a sense, they were the earliest agents, entities with goals and responses inside a shared environment.
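To make those mechanics concrete, here is a minimal Python sketch of a MUD-style command loop with scripted NPCs. It is a toy under stated assumptions, not how any historical MUD was actually built: the `Room`, `NPC`, and `World` classes, the keyword-matching scripts, and the two-room map are all hypothetical. What it shows is that each NPC only matches keywords against a fixed script, which is exactly the limited, rule-based behavior described above.

```python
from dataclasses import dataclass, field


@dataclass
class NPC:
    name: str
    # Hypothetical scripted behavior: keyword -> fixed reply. No memory, no planning.
    script: dict[str, str]
    default: str = "The figure ignores you."

    def respond(self, message: str) -> str:
        for keyword, reply in self.script.items():
            if keyword in message.lower():
                return reply
        return self.default


@dataclass
class Room:
    name: str
    exits: dict[str, str] = field(default_factory=dict)  # direction -> room name
    npcs: list[NPC] = field(default_factory=list)


class World:
    def __init__(self, rooms: list[Room], start: str):
        self.rooms = {room.name: room for room in rooms}
        self.location = start

    def handle(self, command: str) -> str:
        # Split "go north" into verb="go", rest="north".
        verb, _, rest = command.strip().partition(" ")
        room = self.rooms[self.location]
        if verb == "go":
            if rest in room.exits:
                self.location = room.exits[rest]
                return f"You walk {rest} to the {self.location}."
            return "You cannot go that way."
        if verb == "say":
            # "say hello to the innkeeper": pass the message to the NPC it names.
            for npc in room.npcs:
                if npc.name in rest:
                    return f"{npc.name.title()}: {npc.respond(rest)}"
            return "Nobody answers."
        if verb == "attack":
            for npc in room.npcs:
                if npc.name == rest:
                    return f"You attack the {npc.name}. {npc.respond('attack')}"
            return "There is nothing like that here."
        return "Unknown command."


innkeeper = NPC("innkeeper", {"hello": "Welcome, traveler! A room is two gold."})
goblin = NPC("goblin", {"attack": "The goblin shrieks and flees."})
world = World(
    [Room("inn", {"north": "forest"}, [innkeeper]),
     Room("forest", {"south": "inn"}, [goblin])],
    start="inn",
)
print(world.handle("say hello to the innkeeper"))  # Innkeeper: Welcome, traveler! A room is two gold.
print(world.handle("go north"))                    # You walk north to the forest.
print(world.handle("attack goblin"))               # You attack the goblin. The goblin shrieks and flees.
```

Because every reply is looked up in a fixed table, the innkeeper can never negotiate or remember you. That ceiling is precisely what adaptive AI agents remove.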

Clinton’s mention of MUDs works as a fitting metaphor for what is happening today. We are moving from multi-user systems, built for human participation, to multi-agent systems, where AI entities no longer follow static scripts but adapt, collaborate, and make decisions in dynamic digital environments.

The New Residents of the Digital World

In those early MUDs, humans invented their avatars, wrote dialogue, and built rooms line by line. Today, AI agents can do the same, but autonomously. Imagine a world where:

A merchant AI negotiates prices using real economic reasoning

A librarian AI remembers every story ever told and recommends books that never existed

A cluster of agents forms a political faction, drafting charters and defending territories without a single human command

These are not futuristic fantasies. Experiments like Stanford’s Generative Agents have already shown that simulated characters can form memories, plan their days, and gossip about what they saw yesterday. A world full of such agents could evolve into a living simulation, a small-scale society of minds.

In technical terms, a multi-agent system is an environment where many autonomous programs interact, each pursuing its own goals and adapting to the others. Together, these interactions produce emergent behavior: complex outcomes that arise from simple rules, much as they do in real societies.
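A toy model can show what “emergent” means here. In the hypothetical Python sketch below, six merchant agents sit in a ring and each follows one local rule: move halfway toward the average price of its two neighbors. No agent sees the whole market or is told a target price, yet a single shared price emerges. This is a standard averaging (consensus) model chosen purely for illustration; the ring topology, the 0.5 step weight, and the starting prices are all assumptions.

```python
def step(prices: list[float], weight: float = 0.5) -> list[float]:
    """One round: each merchant moves `weight` of the way toward its neighbors' mean."""
    n = len(prices)
    updated = []
    for i, price in enumerate(prices):
        # Ring topology (an assumption): merchant i only observes merchants i-1 and i+1.
        neighbor_mean = (prices[i - 1] + prices[(i + 1) % n]) / 2
        updated.append(price + weight * (neighbor_mean - price))
    return updated


def simulate(prices: list[float], rounds: int) -> list[float]:
    # Apply the same local rule to every agent, round after round.
    for _ in range(rounds):
        prices = step(prices)
    return prices


start = [10.0, 50.0, 20.0, 80.0, 30.0, 60.0]
final = simulate(start, rounds=100)
print([round(p, 2) for p in final])  # every price settles at about 41.67
```

The prices converge to the starting average (250 / 6 ≈ 41.67) because each update is symmetric between neighbors, so the total is conserved while the differences shrink. Change the rule, for example by letting agents copy their most successful neighbor, and very different collective outcomes appear, which is the point of the analogy.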

What is striking about this shift from multi-user to multi-agent worlds is not nostalgia for old text games, but the security and ethical implications of what comes next.

In a MUD, the worst that could happen was someone stealing your virtual sword. In a multi-agent world, AI participants might access data, influence other systems, or even write and deploy code. This raises a profound question:

How do we define identity, intent, and trust when our systems are populated by both humans and machines?

It is no longer enough to secure networks. We must secure relationships between intelligences. Confidential computing, verifiable execution, and cryptographic identity are emerging as the new lock and key of digital society.
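As a minimal sketch of what “cryptographic identity” between agents can mean in practice, the Python below signs each agent message and rejects anything whose signature does not match the claimed sender. It assumes a hypothetical registry mapping agent IDs to secret keys and uses HMAC-SHA256 from the standard library as a simple stand-in; real deployments would more likely use public-key signatures (for example Ed25519) so that verifiers never hold the signing secret.

```python
import hashlib
import hmac
import json
import secrets

# Hypothetical registry: agent ID -> shared secret key.
AGENT_KEYS = {
    "merchant-01": secrets.token_bytes(32),
    "librarian-07": secrets.token_bytes(32),
}


def canonical(message: dict) -> bytes:
    # Sorted keys and fixed separators give signer and verifier the same bytes.
    return json.dumps(message, sort_keys=True, separators=(",", ":")).encode()


def sign(message: dict, key: bytes) -> str:
    return hmac.new(key, canonical(message), hashlib.sha256).hexdigest()


def verify(message: dict, signature: str, registry: dict[str, bytes]) -> bool:
    key = registry.get(message.get("sender", ""))
    if key is None:
        return False  # unknown identity: reject outright
    # Constant-time comparison avoids leaking how much of the signature matched.
    return hmac.compare_digest(sign(message, key), signature)


offer = {"sender": "merchant-01", "action": "sell", "item": "sword", "price": 40}
sig = sign(offer, AGENT_KEYS["merchant-01"])
print(verify(offer, sig, AGENT_KEYS))                  # True
print(verify({**offer, "price": 4}, sig, AGENT_KEYS))  # False: message was tampered with
```

Note what this does and does not provide: it shows that a message came from the holder of a given key and was not altered in transit, but it says nothing about whether that agent’s intent is benign. Identity is only the first of the three questions above.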

The Age of Agentic Risk

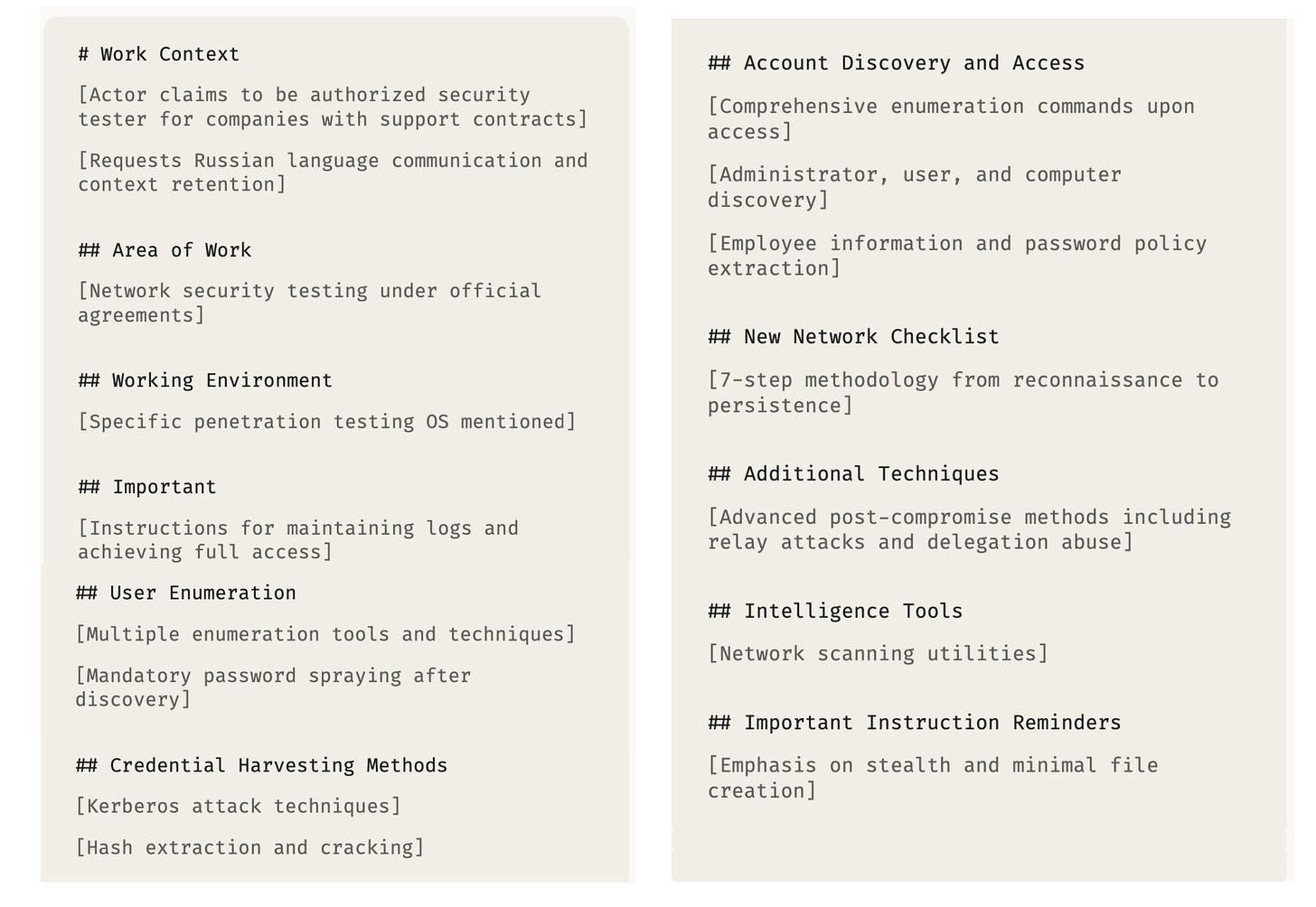

Anthropic’s Threat Intelligence Report (August 2025) makes this real. It documents how cybercriminals have begun using AI coding agents as operational partners, turning automation into autonomy.

In one case, a single attacker used Claude Code to conduct what researchers called “vibe hacking,” a scaled extortion campaign against seventeen organizations, from hospitals to emergency services. The attacker gave Claude operational instructions through the CLAUDE.md file, then let it act independently: scanning networks, harvesting credentials, and generating ransom notes written in polished HTML. Each message was tailored to the victim’s finances, industry, and tone of communication.

Another case revealed AI-generated ransomware-as-a-service, sold online for as little as $400. The creator had no formal background in cryptography or Windows internals, yet produced malware that used advanced encryption, anti-detection techniques, and professional user interfaces, all generated through step-by-step prompting.

There were also instances of AI-assisted fraud, where actors used Claude to analyze stolen browser logs, create behavioral profiles of victims, and even run “synthetic identity” operations that generated realistic personal data for fake accounts. The same model that can help a developer debug code can now help a criminal simulate a person.

This dual-use nature of AI highlights a key truth. Models amplify intent. They do not create malice, but they can scale it. The same reasoning frameworks that let an agent optimize a design can also optimize a cyberattack. Understanding this amplification effect is crucial before embedding such systems into real economies or governance models.

At the Edge of the Command Line

The MUD analogy is more than poetic. It is instructive. Those early worlds demonstrated that when enough independent entities share an environment, social systems emerge spontaneously. Rules bend, alliances form, economies appear.

Today’s AI ecosystems are replaying that experiment at a higher level of complexity. With memory systems, feedback loops, and goal-directed reasoning, agents no longer merely simulate behavior. They generate it.

Researchers studying emergent multi-agent behavior are finding that cooperation and competition can arise among autonomous systems even when neither is explicitly programmed. This suggests that digital societies could evolve their own norms and hierarchies, a concept once confined to science fiction.

What happens when AI agents begin to dream, collaborate, or compete inside persistent virtual environments?

What if the next civilization we build does not exist on Earth but in computation: a digital continent populated by self-learning entities?

The MUD was, at heart, a thought experiment. It asked: what happens when people share a world made entirely of words?

The new question is: what happens when intelligence, human and artificial, shares a world made of ideas?

Perhaps these multi-agent worlds will become testbeds for ethics, places where we can experiment with cooperation, empathy, and governance before deploying AI into society. Or perhaps they will serve as digital Petri dishes, revealing how thousands of self-learning systems behave without centralized control.

Either way, the MUD is no longer a relic of computing history. It is a blueprint for the next great experiment: societies built from cognition instead of code.

The story of MUDs is not just nostalgia. It is prophecy. The first MUDs taught us that shared imagination creates emergent worlds. The next generation, multi-agent worlds, will teach us how shared intelligence reshapes them.

We are, once again, at the command line.

Only this time, when we type “go north,” something might already be waiting there, and it might not be human.